First and foremost, I want to thank my friend Mat for helping with the design. I couldn’t have done it without you!

As you will recall from part IV, I had a second 4U case used only to house drives. This was the starting point for my NAS server 2, case #2. I would have one case for the host system (and some drives) and a second case just for drives.

If you look at the Backblaze design, you’ll see it has space for a motherboard, 2 PSUs and 46 drives (1 is for the host OS). That’s an awful lot to squeeze into a single case! It works for them as they are racked up in a data centre with deep racks. Something would have to give in my 545mm deep ‘value’ case.

Forty-five drives is a nice number, as it gives you 3 x 15-drive arrays. I think that’s the most it’s sensible to have in a RAID 6 configuration. With up to 11 drives fitting in the first case, I needed to cram at least 34 into case #2.
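As a quick sanity check on those numbers, here is a minimal Python sketch of the drive-count arithmetic. The function and variable names are purely illustrative. The only fact not stated above is that RAID 6 gives up two drives' worth of capacity per array to parity.

```python
# Sketch of the drive-count arithmetic; all names here are illustrative.
RAID6_PARITY_DRIVES = 2  # RAID 6 stores two drives' worth of parity per array


def drive_budget(total_drives, arrays, case1_drives):
    """Split the drives into equal RAID 6 arrays and across the two cases."""
    per_array = total_drives // arrays
    data_per_array = per_array - RAID6_PARITY_DRIVES
    return {
        "drives_per_array": per_array,
        "data_drives_per_array": data_per_array,
        "total_data_drives": data_per_array * arrays,
        "case2_minimum": total_drives - case1_drives,
    }


budget = drive_budget(total_drives=45, arrays=3, case1_drives=11)
for name, value in budget.items():
    print(f"{name}: {value}")
```

So of the 45 drives, 39 would hold data and 6 would go to parity, and case #2 has to take at least 34 of them.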

Having verified that SATA port multipliers work perfectly well, I was happy to take the same approach as Backblaze with their backplanes. What I decided to think about a bit more carefully than they did was the host SATA ports. They put 20 drives on the PCI bus, but I knew from experience this would degrade performance.

Since I already had a 4-port PCIe card, it made sense to use that for 4 port multipliers. This would give me 20 ports on a PCIe x4 bus – the drives won’t all operate at maximum speed at the same time, but there’s still more than enough throughput to saturate a gigabit LAN. This puts the SATA port count at 26 so far. Looking for 45 in total, I would need another 19. The only practical way to do this, based on the number of PCIe slots I had, was another 4-port card, with each port hosting a 5-port multiplier/backplane. So instead of cramming one standalone port multiplier and its associated drives into the main case, we decided to put them all in case #2.
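The port counting is easy to lose track of, so here is a rough Python sketch of it. The names are made up for illustration. The 6 "other" ports are simply what is left when the first card's 20 are subtracted from the 26 quoted above; presumably they are the host's own ports, but that is an assumption.

```python
# Sketch of the SATA port budget; names are illustrative, not from any tool.
PORTS_PER_CARD = 4        # each PCIe SATA card has 4 host ports
PORTS_PER_MULTIPLIER = 5  # each port multiplier/backplane fans out to 5 drives
TARGET_DRIVES = 45
PORT_COUNT_SO_FAR = 26    # figure quoted in the post after the first card


def multiplied_ports(cards):
    """Drive ports available when every card port feeds one multiplier."""
    return cards * PORTS_PER_CARD * PORTS_PER_MULTIPLIER


first_card = multiplied_ports(1)               # 20
other_ports = PORT_COUNT_SO_FAR - first_card   # 6, assumed to be host ports
still_needed = TARGET_DRIVES - PORT_COUNT_SO_FAR
second_card = multiplied_ports(1)              # 20, one more than needed
total_ports = PORT_COUNT_SO_FAR + second_card
case2_drive_slots = multiplied_ports(2)        # all 8 multipliers in case #2

print(f"still needed after first card: {still_needed}")
print(f"total ports with second card:  {total_ports}")
print(f"drive slots in case #2:        {case2_drive_slots}")
```

A second card overshoots the requirement by one port, and putting all 8 multipliers in case #2 is where the figure of 40 drives comes from.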

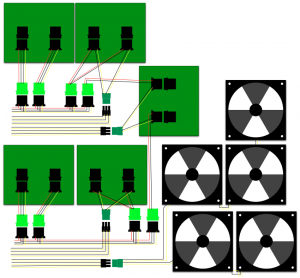

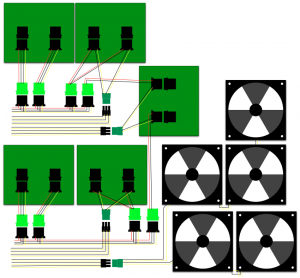

Spanning power between cases didn’t seem like the best idea, and with 40 drives now needing to be catered for, 2 PSUs had to fit in the second case. Here’s a bird’s-eye and front-on view of the layout. Thanks again, Mat, for coming up with it.

The black bars going left-to-right are for reinforcement – 40 hard drives weigh a lot! The other thing to note is the orientation of the drives. Keeping them that way allows efficient air-flow, from front to back.

The original intention for my 1000W PSU was to power the whole system and 22 drives. Since I’m now trying to support up to 45 drives and keep the power in each case separate, the power arrangements needed to be rethought.

I allocated the OCZ PSU to case #2 and re-purposed an old PSU I had for the main system. I calculated that the amperage available on each voltage rail was sufficient to supply 5 of the 8 port multipliers. Maybe it would have been ‘neater’ to split it four and four, but this way, when upgrading, I wouldn’t need such a beefy second supply.

Each backplane requires two molex feeds. Here’s a wiring diagram (credit once again to Mat).

Now that we know pretty much what we’re building, in the next post I’ll talk about the parts and construction.
